I am #self-hosting my own #Mastodon instance on a #RaspberryPi 4 under #Docker. With the arrival of the new #RaspberryPi5, I wanted to try a full migration: installing the system from scratch and performing a backup and restore, partly to understand how all of this works.

Here I intend to describe my journey, a full step-by-step walkthrough!

Overview

This is quite a long article, as I tried to be very explicit. I divided it into the following sections:

- Goal, requirements and assumptions

- Install Docker & docker-compose

- Get the Mastodon code

- Generate the image

- Backup the previous instance

- Restore the backup from the previous instance

- Let's hit the button!

- Wrapping up

- References

0. Goal, requirements and assumptions

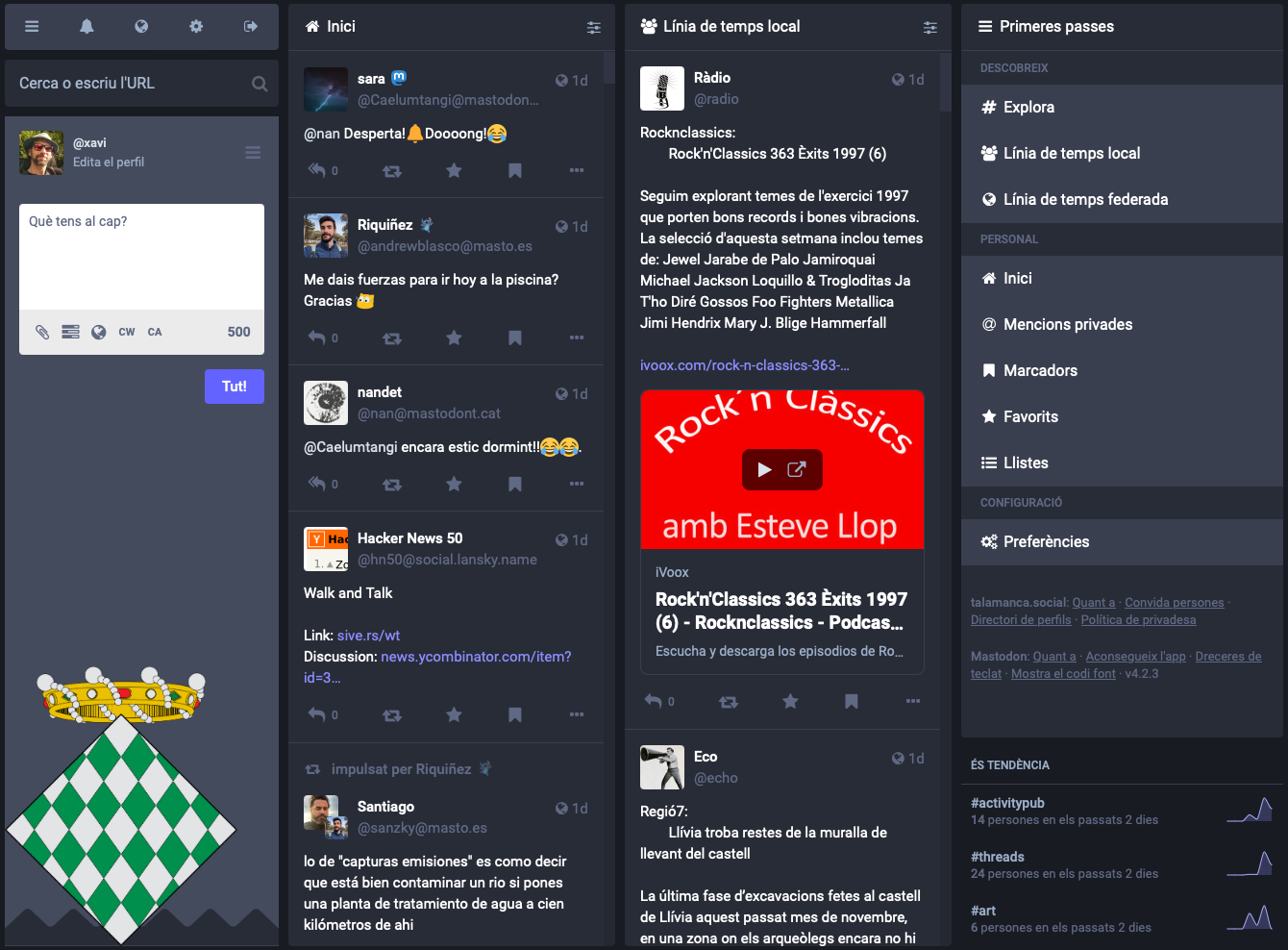

So I have this Mastodon instance running under Docker on a Raspberry Pi 4. I recently upgraded it to the latest version, v4.2.3, and it works great. It's worth mentioning that, to improve performance, it boots from a SATA III SSD and serves the media files from an external CDN.

0.1. The Goal

That's easy: I want to "move" my instance to the Raspberry Pi 5 that I just received. But by move I don't mean moving the Docker volumes between machines. I'd like to do a bit of cleanup by installing the new machine from scratch and installing the supporting software fresh, so I can benefit from Debian Bookworm and newer versions in general. This also means facing a backup and restore of Mastodon's Postgres DB, something I had never done, and for which there seems to be little documentation online when both instances run under Docker.

0.2. The high level approach

So how do we do that? The overall idea is to install a brand new Mastodon instance and, before running it for the first time (when it would set up the DB), restore the old DB and the environment variables. This way the new installation finds everything already set up and proceeds as if the data belonged to it. Then we change the reverse proxy to point the traffic to the new instance, and we're done.

We have Docker in the origin setup and we'll also have Docker in the target one. This means a bit of extra work when we deal with the code and the DB, as they live inside their Docker containers, but one eventually gets used to working like this.

When it comes to the Mastodon instance code itself, we need to identify whether we maintain customisations or not. If we don't change anything in the code (same logos, same icons, ...), most of the work is way simpler and the process is straightforward. If we do have customisations, we need to think of a way to merge them with the code (again on every version upgrade). In this article I mention two approaches: keeping the changes on top of the original cloned repository, and forking the original code into a repository that we own, where we merge our changes. In both cases a new Docker image needs to be built, and we'll do so here.

0.3. Requirements

Of course, apart from the current setup, I need:

- A Raspberry Pi 5

- A SATA III SSD with a SATA to USB 3 dongle. Why? Because the current Raspberry Pi 4 installation has exactly the same setup, which avoids killing the MicroSD card and showed better overall performance, so I want to keep it.

I was thinking about reusing the disk and dongle that are currently attached to the Raspberry Pi 4, but it would mean erasing the disk first, losing the ability to go back to the old setup in case this migration goes wrong. And the cost is quite contained, around 20 euros altogether.

0.4. Assumptions

- The Raspberry Pi 5 is set up following the article Spawning a Raspberry Pi 5 with Raspberry Pi OS in a SSD SATA III disk, which leaves us with a ready-to-play Bookworm system with a static IP and SSH access, in this case also booting from an SSD. Yay!

- The Reverse Proxy lives on a separate host called `dagobah`, as explained in this article: Set up a Reverse Proxy in a Raspberry Pi with Apache

- I also run a local DNS server on `dagobah`, as explained in this article: Quick DNS server on a Raspberry Pi, so I refer to this new Raspberry Pi 5 by its hostname, `jakku`. You may want to replace it with your host's IP everywhere in the article.

- The user is `xavi`. You may want to replace it with your user.

- The Raspberry Pi 4 from which I'm migrating is called `jakku_old`, same `xavi` user.

- There is another host that holds the backups, called `alderaan`, same `xavi` user.

- I have the hosts pre-authorised with each other through their keys, as explained in this article: Set up the SSH Key authentication between hosts, so I don't get asked for the password when I interoperate between them.

- The domain talamanca.social is already bringing traffic to our external IP, so I will skip the DNS and router setup.

- The old Mastodon instance is already upgraded to the latest version. Having the old instance up to date makes things easier, as the new installation will match versions and moving the data should be smoother.

So, let's jump directly to the newly prepared Raspberry Pi 5!

1. Install Docker & docker-compose

Docker will allow us to run all the needed infrastructure isolated from the machine itself. It lets us spin up any infrastructure without installing a pile of components on the system itself, making it really easy to keep the system up to date and to repurpose the Raspberry Pi if needed.

docker-compose is a wrapper tool that makes our life with Docker easier. We'll install both.

1.1. Install Docker

- SSH into `jakku`:

  `ssh xavi@jakku`

- Download and install Docker:

  `curl -sSL https://get.docker.com | sh`

- Add the current user to the `docker` group:

  `sudo usermod -aG docker $USER`

- Exit the session and SSH in again.

- Test that it works with a hello world container:

      $ docker run hello-world
      Unable to find image 'hello-world:latest' locally
      latest: Pulling from library/hello-world
      70f5ac315c5a: Pull complete
      Digest: sha256:c79d06dfdfd3d3eb04cafd0dc2bacab0992ebc243e083cabe208bac4dd7759e0
      Status: Downloaded newer image for hello-world:latest

      Hello from Docker!
      This message shows that your installation appears to be working correctly.

      To generate this message, Docker took the following steps:
       1. The Docker client contacted the Docker daemon.
       2. The Docker daemon pulled the "hello-world" image from the Docker Hub.
          (arm64v8)
       3. The Docker daemon created a new container from that image which runs the
          executable that produces the output you are currently reading.
       4. The Docker daemon streamed that output to the Docker client, which sent it
          to your terminal.

      To try something more ambitious, you can run an Ubuntu container with:
       $ docker run -it ubuntu bash

      Share images, automate workflows, and more with a free Docker ID:
       https://hub.docker.com/

      For more examples and ideas, visit:
       https://docs.docker.com/get-started/

- Get the container ID of this test run. In my case it is `1dfdb4d1a3cf`:

      $ docker ps -a
      CONTAINER ID   IMAGE         COMMAND    CREATED         STATUS                     PORTS     NAMES
      1dfdb4d1a3cf   hello-world   "/hello"   2 minutes ago   Exited (0) 2 minutes ago             stupefied_hofstadter

- Remove the test container:

  `docker rm 1dfdb4d1a3cf`
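To double-check that the new `docker` group membership actually took effect after logging in again, a tiny helper like this one can be used. It is a minimal sketch: `session_in_group` is a hypothetical name, and it relies on `id -nG` without a user argument, which reports the groups of the current session (that is why the re-login matters).

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if the CURRENT session belongs to group $1.
# `id -nG` with no user argument lists the groups of the running process,
# so a freshly added group only shows up after logging in again.
session_in_group() {
  local group="$1"
  # One group name per line, then look for an exact match.
  id -nG | tr ' ' '\n' | grep -qx -- "$group"
}

# Usage on jakku after re-login:
#   session_in_group docker && echo "docker group OK" || echo "log out and in again"
```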

1.2. Install docker-compose

- First of all, check the latest version on their releases page: Releases · docker/compose · GitHub. At the time of writing, the latest stable release was v2.23.3.

- Download the right binary from their releases into the binaries directory of our system. Pay attention to the version in the following command:

  `sudo curl -L "https://github.com/docker/compose/releases/download/v2.23.3/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose`

- Give executable permissions to the downloaded file:

  `sudo chmod +x /usr/local/bin/docker-compose`

- Test the installation:

  `docker-compose --version`

  It should print something like:

  `Docker Compose version v2.23.3`
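A small variation on the download step, in case the URL built from `uname -s` gives trouble: the v2 release assets I've seen are named with a lowercase OS, for example `docker-compose-linux-aarch64`, so the sketch below lowercases it. `compose_url` is a hypothetical helper, not part of Docker's tooling. As far as I can tell, the `get.docker.com` script also installs the Compose v2 plugin, invoked as `docker compose` (with a space), which is the form used later in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the Docker Compose v2 release asset URL for a
# given version, OS name and machine architecture (defaults: this machine).
compose_url() {
  local version="$1" os="${2:-$(uname -s)}" arch="${3:-$(uname -m)}"
  # Assumption: v2 assets use a lowercase OS name (docker-compose-linux-aarch64).
  os="$(printf '%s' "$os" | tr '[:upper:]' '[:lower:]')"
  printf 'https://github.com/docker/compose/releases/download/%s/docker-compose-%s-%s\n' \
    "$version" "$os" "$arch"
}

# Usage (same target path as above; -f makes curl fail on HTTP errors):
#   sudo curl -fL "$(compose_url v2.23.3)" -o /usr/local/bin/docker-compose
```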

2. Get the Mastodon code

⚠️ Do you have customisations?

My instance is slightly customised, meaning that I have some icons and images that I keep changed across upgrades.

If you don't have customisations, your process is pretty simple: get the official Mastodon code from GitHub and check out the latest version. I usually clone the code into a directory in the user's home:

- Move to your home directory, if you're not there already:

  `cd ~`

- Clone the official Mastodon code into a directory called `talamanca.social` (yeah, just the domain name; you can call it whatever you want):

  `git clone https://github.com/mastodon/mastodon.git talamanca.social`

- Now move into the new directory:

  `cd talamanca.social`

- Lastly, we want to make sure we deploy the latest released version (at this time, v4.2.3) by fetching the info from the server and checking out the right tagged version:

  `git fetch && git checkout v4.2.3`

And that's it. With this you're actually ready for the next step.
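If you'd rather not look up the latest release by hand, a minimal sketch like the following picks the newest stable `vX.Y.Z` tag from the clone. `latest_stable_tag` is a hypothetical helper; it assumes Mastodon's tags follow the `vX.Y.Z` pattern and treats anything with a suffix (such as `-beta.1` or `-rc.1`) as a pre-release to skip.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the newest stable vX.Y.Z tag of the repository
# in the current directory, ignoring pre-releases such as v4.3.0-beta.1.
latest_stable_tag() {
  # -v:refname sorts tags as versions, newest first (v4.2.10 > v4.2.3).
  git tag --list 'v*' --sort=-v:refname \
    | grep -E '^v[0-9]+\.[0-9]+\.[0-9]+$' \
    | head -n 1
}

# Usage inside the cloned repository:
#   git fetch --tags && git checkout "$(latest_stable_tag)"
```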

Yes, I have customisations

If, like me, you have your instance customised, the setup above is a bit rough, as you need to keep the customisations uncommitted here, and every time a new version appears you need to `git stash` and `git stash pop` them, and cross your fingers that nothing goes wrong.

Also remember: the moment you customise an instance under Docker, you need to build a new Docker image no matter what; the official one no longer helps you.

This is actually the approach I've been using until now, and you can get an idea of what an upgrade looks like, including the image build, by reading this article: Build a customized Docker image for Mastodon 4.2.0 in a Raspberry Pi.

If you followed this route (including the image build described there), you can now safely jump to Section 4. Backup the previous instance.

In the next sections I explain another approach to handle customisations.

2.1. Let's fork and customise

Another way to keep your instance customised while getting the new versions from the original code is to fork the original repository into our account. This creates a new repository under our control, mirroring the original source, where we can apply all the customisations we want and commit & push them to make sure we don't lose anything in case of a catastrophe.

What is this about?

We'll rely on a feature that GitHub provides: Syncing a fork. I would actually recommend reading the whole Working with forks - GitHub Docs documentation.

ℹ️ I already tried this in another project, but in this case the Mastodon team delivers a bigger amount of more structured changes, so I also evolved the strategy a bit so that I can work better with tagged versions.

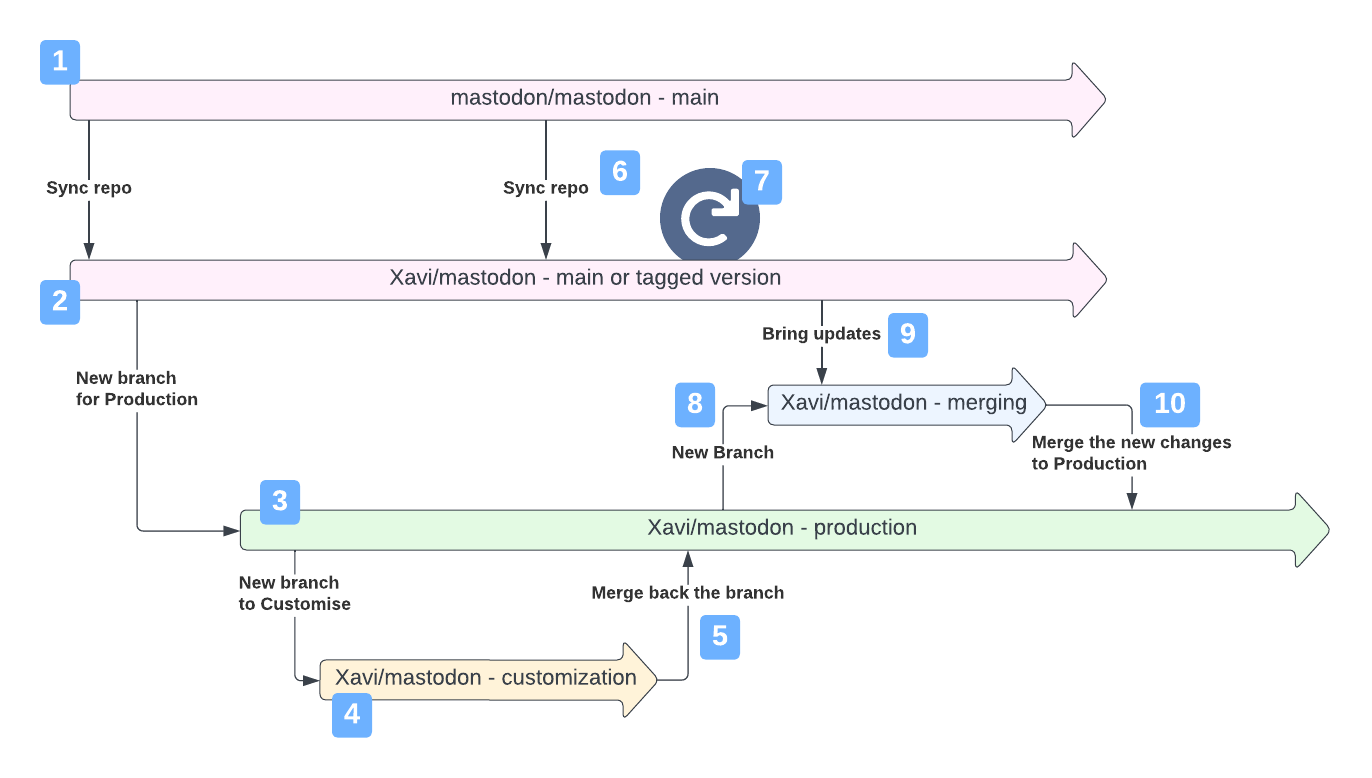

In a nutshell, what we want is to keep our main branch as a mirror of the original repo (serving as the incoming route for the original code) and maintain a different branch for production (where we merge the original code with our customised code). Let's walk through the diagram:

- This is the original repository. We don't want to touch it.

- This is our forked project in the `main` branch, and it should act as a local mirror of the original code. Once it's synced and properly configured locally, we can navigate through the tagged versions. Let's say that here we do a `git checkout v4.2.3`, so our forked repo is pinned to this latest version.

- We create a new `production` branch from our forked repo at that tagged version. This will be the branch checked out on the host serving the application.

- Then we create a new branch for every `customisation` we want to apply; as it comes from our `production` branch, it also carries the latest changes we have already integrated. Now let's say that we have finished customising.

- We merge this `customisation` branch into the `production` branch. At this point `production` contains all the original code at the tagged version plus the customisations. This is when you want to build a new Docker image, as it will contain all the new code and the customisations together. Yay! New customised and latest code!

- Now we notice that Mastodon tagged a new version. OK, we need to sync the repos to bring this code into our forked repository. This means that the `main` branch is in sync.

- And now, locally in our forked repo, we check out the new version, let's say v4.2.4, with `git checkout v4.2.4`. Great, now we have the new code with us, but still unmerged into the `production` branch.

- We want to ensure a smooth merge into `production`, so before attempting to merge the new code we create a new `merging` branch from the `production` branch, so we can play safely.

- Then we merge the new code from our forked `main` branch, checked out at `v4.2.4`. We may have to resolve conflicts and amend broken customisations. There's no stress, as it is just a branch that won't affect the final state of the code. We could even build a temporary image and run it locally to see if the instance runs smoothly. Let's say that we solved all conflicts and everything looks good in the `merging` branch.

- Now that `merging` is ready, we merge it into the `production` branch. Because of the work we did in the previous point, this merge is going to be smooth. And finally, here we end up again with the latest code and the customisations well integrated, so we'll create a new Docker image from it. Yay! New customised and latest code one more time!
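The upgrade half of this flow (fetch the new tag, branch `merging` off `production`, merge the tag there) could be scripted roughly like this. It's a minimal sketch, not what I actually ran: `prepare_merge` is a hypothetical helper, it assumes this article's remote naming (`origin` is the original mastodon/mastodon repository, so tags come from there), a production branch called `production/talamanca.social`, and a `merging/<tag>` branch name of my own choosing.

```shell
#!/usr/bin/env bash
# Hypothetical helper for the "merging branch" upgrade step.
#   $1: new Mastodon tag (e.g. v4.2.4)
#   $2: production branch (default: production/talamanca.social)
prepare_merge() {
  local new_tag="$1" prod="${2:-production/talamanca.social}"
  local merging="merging/${new_tag}"

  # Make sure the new tag is known locally (skipped if there is no origin).
  if git remote get-url origin >/dev/null 2>&1; then
    git fetch --tags origin || return 1
  fi
  git rev-parse -q --verify "refs/tags/${new_tag}" >/dev/null || {
    echo "unknown tag: ${new_tag}" >&2; return 1; }

  # Branch off production so conflicts never touch it directly.
  git checkout -q -b "$merging" "$prod" || return 1

  # Merge the tagged release; on conflicts git stops here and the
  # customisations can be fixed calmly on this branch.
  git merge --no-edit "$new_tag"
}

# Once the merging branch builds and runs fine:
#   git checkout production/talamanca.social && git merge merging/v4.2.4
```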

So, now that we've seen the main idea, let's go deeper into each point:

2.2. Fork the project and get the code at the latest version

That is pretty simple. Once we're logged into our GitHub account:

- Navigate to Mastodon's GitHub repository: https://github.com/mastodon/mastodon

- Click the `Fork` button, placed in the top right area of the screen between the `Watch` and `Star` buttons. It will follow up with some more questions regarding your new forked repository in your account.

Let's say that it got forked into my account as a repository called XaviArnaus/mastodon. Now we want to clone it into our local system:

- Move to where you want it cloned. As explained above, I tend to clone projects as a subdirectory of home:

  `cd ~`

- Clone the repository into a directory named after the domain. Remember that we want our forked one!

  `git clone git@github.com:XaviArnaus/mastodon.git talamanca.social`

  Then move into it with `cd talamanca.social`.

  My cloned fork had `origin` pointing to my forked repository and nothing pointing to the original source (which, as it turns out, is simply what `git clone` does: `origin` is the URL you cloned from). This meant I couldn't properly synchronise to a version tag. We need 2 remotes defined, so we can get the tags from the original code AND be able to push changes to our repository:

  - `origin` pointing to the original Mastodon repository
  - `upstream` pointing to my forked Mastodon repository

  I fixed it by renaming the current remote and adding a new one:

- Rename the remote `origin` to `upstream`, because that is where we want to send our code to keep it safe:

  `git remote rename origin upstream`

- Add a new remote `origin` pointing to the original repository, so we can get the tags from it:

  `git remote add origin https://github.com/mastodon/mastodon.git`

- And now we fetch from both remotes:

  `git fetch --all`

  We should get a really long list of tags, coming basically from `origin`.

- Now we check out the latest Mastodon version, `v4.2.3`:

  `git checkout v4.2.3`

And with this we have our forked repository up and in sync with the original code.
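The remote rewiring above can be wrapped in a small helper that is safe to re-run. This is a minimal sketch: `rewire_remotes` is a hypothetical name, and it assumes the layout chosen in this article (`upstream` is our fork and the push target, `origin` is the original repository and the source of tags).

```shell
#!/usr/bin/env bash
# Hypothetical helper reproducing this article's remote layout:
#   upstream -> our fork (push target), origin -> original repo (tags).
# Run it inside the freshly cloned fork; re-running it changes nothing.
rewire_remotes() {
  local original_url="${1:-https://github.com/mastodon/mastodon.git}"
  # Rename the clone's origin only if the rename hasn't happened yet.
  if ! git remote get-url upstream >/dev/null 2>&1; then
    git remote rename origin upstream || return 1
  fi
  # Add the original repository as origin if it is not there yet.
  if ! git remote get-url origin >/dev/null 2>&1; then
    git remote add origin "$original_url" || return 1
  fi
  git remote -v
}

# Usage, then continue with the fetch and checkout above:
#   cd ~/talamanca.social && rewire_remotes && git fetch --all && git checkout v4.2.3
```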

2.3. Prepare our Production branch

So what we want is a production branch where we'll centralise the versioned code from the original repository AND our customisations.

And just to repeat, we start from our forked repository, already checked out at the latest tagged version.

- We create a new `production/talamanca.social` branch:

  `git checkout -b production/talamanca.social`

- And before anything else, let's push this branch so we register it in our remote (so it will already be available for our first Pull Request later on). Note that I am specifying `upstream` as the remote I want to push these changes to:

  `git push upstream`

  It will suggest opening a Pull Request, but as this branch will never be merged into `main`, we can ignore it.

2.4. Customise it!

Now we want to apply our customisations. As I explained above, I previously had a set of changes lying around in the cloned repository that I had to `git stash` and `git stash pop` on every upgrade.

So what I did was copy them to my backup server (called `alderaan` here), and now we'll apply them as a sort of example of customising a Mastodon instance:

- All work starts by creating a new branch out of our current "last good version" in the `production` branch, which we'll use for our first customisation:

  `git checkout -b customisations/favicons`

- Now I'm going to copy over all the files that customise my favicons from the remote backup location:

      scp alderaan:/home/xavi/instance_backups/talamanca.social/customisations/public/favicon.ico public/.
      scp alderaan:/home/xavi/instance_backups/talamanca.social/customisations/app/views/layouts/application.html.haml app/views/layouts/.
      scp alderaan:/home/xavi/instance_backups/talamanca.social/customisations/app/javascript/images/logo-symbol-icon.svg app/javascript/images/.
      scp alderaan:/home/xavi/instance_backups/talamanca.social/customisations/app/javascript/icons/favicon-* app/javascript/icons/.
      scp alderaan:/home/xavi/instance_backups/talamanca.social/customisations/app/javascript/icons/apple-touch-icon-* app/javascript/icons/.
      scp alderaan:/home/xavi/instance_backups/talamanca.social/customisations/app/javascript/icons/android-chrome* app/javascript/icons/.

- Commit the changes and push them to our forked repository:

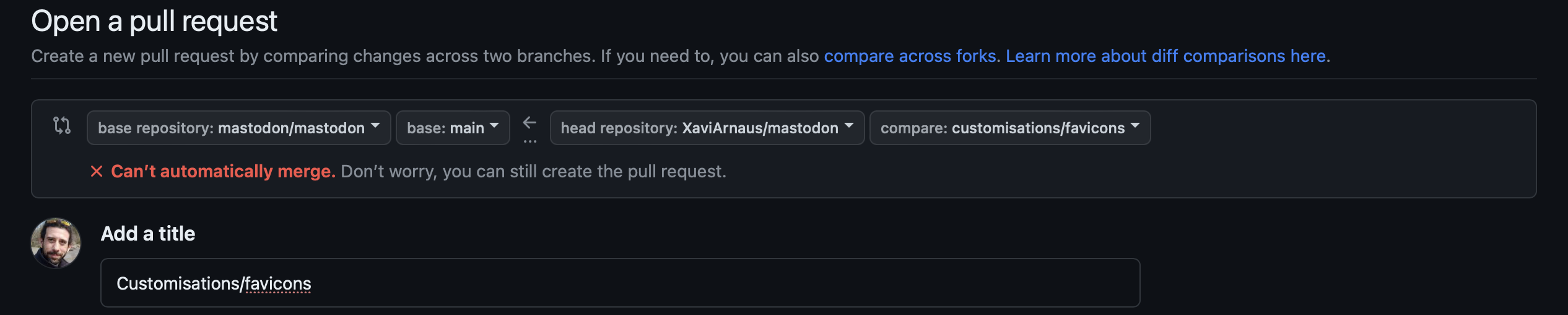

      git add .
      git commit -m "Android, Apple, generic and masked favicons"
      git push upstream

  Of course, it suggests opening a Pull Request. I personally like to do so, so I can check one last time what is going to be merged, and merge it in a more visual way. It also gets registered in GitHub, so I can come back later and view the changes and the description I entered.

  The problem with the Pull Request is that GitHub targets the original repository by default, but we want to merge into OUR repository, in the `production/talamanca.social` branch, so we have to change both, from:

  (pay attention: the repository is the original mastodon/mastodon one, in their `main` branch)

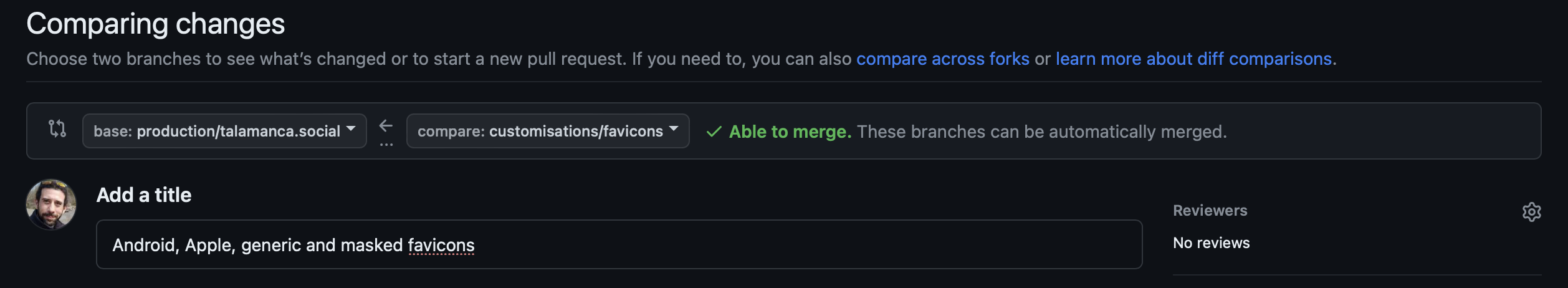

  to:

  (pay attention: the repository is our forked XaviArnaus/mastodon, in our `production/talamanca.social` branch)

  And then we'll be able to review, merge the code and finally delete the now useless `customisations/favicons` branch.

- In the local shell, move to the `production/talamanca.social` branch and get the new customised and merged code:

      git checkout production/talamanca.social
      git pull upstream production/talamanca.social

  A warning actually appeared, saying that this `production/talamanca.social` branch is not bound to any remote branch. We can set it up so that our local `production/talamanca.social` tracks upstream's `production/talamanca.social`:

  `git branch --set-upstream-to=upstream/production/talamanca.social production/talamanca.social`

And with this we have the production branch with the customised favicons already integrated into the latest code. YAY!

Just for the record, I'm going to customise a bit more, now all in one block:

    git checkout -b customisations/others-from-initial
    scp alderaan:/home/xavi/instance_backups/talamanca.social/customisations/public/robots.txt public/.
    scp alderaan:/home/xavi/instance_backups/talamanca.social/customisations/app/javascript/images/preview.png app/javascript/images/.
    scp alderaan:/home/xavi/instance_backups/talamanca.social/customisations/docker-compose.yml .
    git add app/
    git commit -m "Add Preview image"
    git add public/
    git commit -m "Add Robots"
    git add docker-compose.yml
    git commit -m "Allow traffic from all sources, not only localhost"
    git push upstream

... followed by the same correction on the Pull Request regarding where it should be merged into. Then double-check the changes, squash and merge, and finally delete the branch.
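Since every customisation is just a file copied back to the same relative path, the repeated `scp` lines could be folded into a loop. This is a minimal sketch: `restore_customisations`, `BACKUP_HOST` and `BACKUP_BASE` are hypothetical names, with defaults mirroring the `alderaan` paths used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy customised files back from the backup host into
# the same relative paths of the repository in the current directory.
BACKUP_HOST="${BACKUP_HOST:-alderaan}"
BACKUP_BASE="${BACKUP_BASE:-/home/xavi/instance_backups/talamanca.social/customisations}"

restore_customisations() {
  local path
  for path in "$@"; do
    # Make sure the target directory exists, then copy the file into it.
    mkdir -p "$(dirname "$path")" || return 1
    scp "${BACKUP_HOST}:${BACKUP_BASE}/${path}" "$(dirname "$path")/" || return 1
  done
}

# Usage, on a fresh customisations/* branch:
#   restore_customisations public/robots.txt app/javascript/images/preview.png
```

The commits stay manual on purpose, so each customisation still gets its own descriptive message.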

3. Generate the image

So here is the thing: as soon as you change a single thing, a new Docker image needs to be built (unless you want to get into the image and change it every time you restart the service). Therefore, let's build the image.

`docker build . -t mastodon:20231210 -f Dockerfile`

It will take a while, but on this Raspberry Pi 5 it feels fast!

Once finished, we could use this image right away, but following the same idea of keeping everything stored outside the Raspberry Pi for safety reasons, it feels natural to push this image to the Docker Hub registry (where we already have an account, right?)

So:

- Log in to Docker Hub:

  `docker login`

- Retag the image so it fits the registry's naming format (`<user>/<repository>:<tag>`):

  `docker tag mastodon:20231210 arnaus/mastodon:talamanca_rpi5_4.2.3`

- Push the image:

  `docker push arnaus/mastodon:talamanca_rpi5_4.2.3`
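To avoid hand-typing the version in the image name, it can be derived from the Mastodon tag the current branch is based on. A minimal sketch: `image_for_checkout` is a hypothetical helper, the `arnaus/mastodon` repository and `talamanca_rpi5_` prefix are just the naming used above, and it relies on `git describe --tags --abbrev=0` returning the nearest tag reachable from `HEAD` (v4.2.3 here, even after merging customisation commits on top).

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive the Docker Hub image name from the nearest
# Mastodon tag, e.g. arnaus/mastodon:talamanca_rpi5_4.2.3.
#   $1: image repository (default arnaus/mastodon)
#   $2: tag prefix       (default talamanca_rpi5_)
image_for_checkout() {
  local repo="${1:-arnaus/mastodon}" prefix="${2:-talamanca_rpi5_}"
  local tag
  # Nearest tag reachable from HEAD; fails if the branch has no tags at all.
  tag="$(git describe --tags --abbrev=0)" || return 1
  printf '%s:%s%s\n' "$repo" "$prefix" "${tag#v}"
}

# Usage on production/talamanca.social (skips the intermediate local tag):
#   image="$(image_for_checkout)"
#   docker build . -t "$image" -f Dockerfile && docker push "$image"
```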

And finally, we need to remember to set this new image in the docker-compose.yml file, so it is used when we start the containers!

`nano docker-compose.yml`

There we have to replace all 3 occurrences of the official Docker image `ghcr.io/mastodon/mastodon:v4.2.3` (for the `web`, `sidekiq` and `streaming` containers) with the one we just built, `arnaus/mastodon:talamanca_rpi5_4.2.3`. When you're done, save (control + o) and exit (control + x).
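The same edit can be done non-interactively with `sed`. A minimal sketch: `use_custom_image` is a hypothetical helper; it assumes the `image:` lines end exactly with the official image name (as in the stock file), keeps a `.bak` copy, and prints how many lines now use the custom image (it returns non-zero if that count is 0).

```shell
#!/usr/bin/env bash
# Hypothetical helper: swap the official Mastodon image for a custom one in
# a docker-compose file. $1: file, $2: official image, $3: custom image.
use_custom_image() {
  local file="$1" official="$2" custom="$3"
  # '|' as sed delimiter because image names contain '/' and ':'.
  # Dots in the names act as regex wildcards, which is harmless here.
  sed -i.bak "s|image: ${official}\$|image: ${custom}|" "$file" || return 1
  # Count the services now using the custom image (grep -c exits 1 on 0).
  grep -c "image: ${custom}\$" "$file"
}

# Usage (expecting 3: web, sidekiq and streaming):
#   use_custom_image docker-compose.yml ghcr.io/mastodon/mastodon:v4.2.3 arnaus/mastodon:talamanca_rpi5_4.2.3
```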

Now we would be ready to start our Mastodon instance. It's up to date and has our customisations integrated. If you're following this guide to build a brand new instance, you can skip the backup and restore in sections 4 and 5.

4. Backup the previous instance

Let's now face the backup of the previous instance. Just as a reminder, the jakku_old Raspberry Pi 4 is still up and running under Docker. We have a host called alderaan with a small directory structure ready to accommodate whatever we bring as backup.

We waited until the very last moment because everything that happens between the backup and the restore will be a blackout for the instance: no posts, no notifications, no new followers... So it's in our interest to spend as little time offline as possible.

According to the official documentation (Backing up your server - Mastodon documentation), I want to back up the Postgres database and the environment file.

What about the media? I am using an external CDN, a DigitalOcean Spaces object storage, which takes load off the little Raspberry Pi and my home internet connection, and also makes this migration a lot easier.

4.1. Backup the Postgres DB

To back up the data in the DB we need to have all the services that can possibly write to it stopped. So, once logged into jakku_old:

- First of all, stop the `web`, `sidekiq` and `streaming` services:

  `docker stop mastodon-streaming-1 && docker stop mastodon-sidekiq-1 && docker stop mastodon-web-1`

- Trigger the backup:

  `docker exec mastodon-db-1 pg_dumpall -U postgres | gzip > postgres_backup_$(date +'%Y%m%d').sql.gz`

- Start the services again. This step makes sense if we're doing a continuous backup strategy, but since this old instance will die once the new one is up and running, we can skip it.

  `docker compose up -d`

- Copy the backup file to the remote location:

  `scp postgres_backup_20231211.sql.gz alderaan:/home/xavi/instance_backups/talamanca.social/database/.`
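One weakness of the one-liner above is that `gzip` happily writes a valid (but empty) archive even if `pg_dumpall` fails. A minimal sketch that checks both, assuming bash (for `PIPESTATUS`); `backup_db` is a hypothetical helper, and the container name is passed in (`mastodon-db-1` on jakku_old).

```shell
#!/usr/bin/env bash
# Hypothetical helper: dump all databases of Postgres container $1 into a
# dated gzip file inside directory $2, and fail loudly on a broken dump.
backup_db() {
  local container="$1" outdir="${2:-.}"
  local file="${outdir}/postgres_backup_$(date +'%Y%m%d').sql.gz"
  local rc

  docker exec "$container" pg_dumpall -U postgres | gzip > "$file"
  rc=${PIPESTATUS[0]}   # exit status of `docker exec`, not of gzip
  if [ "$rc" -ne 0 ]; then
    echo "pg_dumpall failed with status $rc" >&2
    return 1
  fi

  # The archive must be valid gzip and contain something.
  gzip -t "$file" || return 1
  [ -n "$(gzip -dc "$file" | head -c 64)" ] || { echo "empty dump" >&2; return 1; }

  echo "$file"
}

# Usage on jakku_old, with web/sidekiq/streaming already stopped:
#   f="$(backup_db mastodon-db-1)" && scp "$f" alderaan:/home/xavi/instance_backups/talamanca.social/database/.
```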

4.2. Backup the Environment vars

The environment variables define several infrastructural aspects of the instance. For example, here is mine:

    LOCAL_DOMAIN=talamanca.social
    SINGLE_USER_MODE=false
    SECRET_KEY_BASE=1234567890abcdef
    OTP_SECRET=1234567890abcdef

    # Push notification
    VAPID_PRIVATE_KEY=1234567890abcdef
    VAPID_PUBLIC_KEY=1234567890abcdef

    # DB
    DB_HOST=db
    DB_PORT=5432
    DB_NAME=mastodon_production
    DB_USER=mastodon
    DB_PASS=super-secret-pass

    # Redis
    REDIS_HOST=redis
    REDIS_PORT=6379
    REDIS_PASSWORD=super-secret-pass

    # Mail
    SMTP_SERVER=smtp.eu.mailgun.org
    SMTP_PORT=587
    SMTP_LOGIN=super-secret-login
    SMTP_PASSWORD=super-secret-pass
    SMTP_AUTH_METHOD=plain
    SMTP_OPENSSL_VERIFY_MODE=none
    SMTP_FROM_ADDRESS='Mastodon <notifications@talamanca.social>'

    # XA:
    DEFAULT_LOCALE=ca # Catalan by default

    # External CDN
    S3_ENABLED=true
    S3_PROTOCOL=https
    S3_BUCKET=statics
    S3_REGION=fra1
    S3_HOSTNAME=cdn.devnamic.com
    S3_ENDPOINT=https://fra1.digitaloceanspaces.com
    S3_ALIAS_HOST=cdn.devnamic.com
    AWS_ACCESS_KEY_ID=1234567890abcdef
    AWS_SECRET_ACCESS_KEY=1234567890abcdef

- Copy the environment file to the remote location:

  `scp .env.production alderaan:/home/xavi/instance_backups/talamanca.social/environment/.`
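At this point both backup artefacts exist on jakku_old, so it's a good moment for a quick consistency check before the old instance goes away. A minimal sketch, with assumptions: `check_backup_pair` is a hypothetical helper, the list of required keys is my own (not exhaustive), values are unquoted as in the file above, and I'm assuming the `pg_dumpall` output contains lines like `CREATE ROLE mastodon;` and `CREATE DATABASE mastodon_production ...`; adjust the patterns if your dump looks different.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sanity-check the env file and the gzipped dump.
#   $1: path to .env.production   $2: path to the gzipped pg_dumpall output
check_backup_pair() {
  local env_file="$1" dump="$2" key missing=0
  local db_user db_name

  # Keys the new instance cannot work without (not an exhaustive list).
  for key in LOCAL_DOMAIN SECRET_KEY_BASE OTP_SECRET VAPID_PRIVATE_KEY \
             VAPID_PUBLIC_KEY DB_HOST DB_NAME DB_USER; do
    if ! grep -q "^${key}=." "$env_file"; then
      echo "missing or empty: $key" >&2
      missing=1
    fi
  done
  [ "$missing" -eq 0 ] || return 1

  # The role and database named in the env file should be created by the dump.
  db_user="$(grep -m1 '^DB_USER=' "$env_file" | cut -d= -f2-)"
  db_name="$(grep -m1 '^DB_NAME=' "$env_file" | cut -d= -f2-)"
  gzip -dc "$dump" | grep -q "^CREATE ROLE ${db_user};" || {
    echo "role ${db_user} not found in dump" >&2; return 1; }
  gzip -dc "$dump" | grep -q "^CREATE DATABASE ${db_name} " || {
    echo "database ${db_name} not found in dump" >&2; return 1; }
}

# Usage on jakku_old, in the instance directory:
#   check_backup_pair .env.production postgres_backup_20231211.sql.gz && echo "backup looks sane"
```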

5. Restore the backup from the previous instance

And now we take the backups from the remote alderaan and bring them to jakku.

First of all, once we're in jakku, make sure we're in the instance's directory:

`cd ~/talamanca.social`

We assume that the instance is not running yet (just in case you jumped straight to this section); otherwise, stop everything with `docker compose down`.

Also, note that the restore order is important: the Docker containers need the environment variables, so first restore the `.env.production` and then the DB.

5.1. Restore the environment vars

- Copy the environment file from the remote location directly into the expected target:

  `scp alderaan:/home/xavi/instance_backups/talamanca.social/environment/.env.production .`

5.2. Restore the Postgres DB

- Copy the backup file from the remote location:

  `scp alderaan:/home/xavi/instance_backups/talamanca.social/database/postgres_backup_20231211.sql.gz .`

- To restore the data we need a running Postgres, so we start its container:

  `docker compose up db -d`

- Copy the backup file into the `postgres` container (`docker cp` expects the container name, not the Compose service name `db`):

  `docker cp postgres_backup_20231211.sql.gz talamancasocial-db-1:/backup.sql.gz`

- Log into the container:

  `docker exec -it talamancasocial-db-1 bash`

Because we don't have anything yet, restoring the DB should not cause any problems. The user `mastodon` and the database `mastodon_production` do not exist yet, so everything should go smoothly (the backup will create them). You can safely skip the next section.

Double-check that the DB is empty and what to do otherwise

Here are some optional steps to double-check that the DB is empty:

- Log into Postgres:

  `psql -U postgres`

- Show the databases we have at this point:

  `\l`

  As we haven't run the Mastodon app yet, the DB setup hasn't run either, meaning we should have a clean one and the output should look like this:

      List of databases
         Name    |  Owner   | Encoding |  Collate   |   Ctype    |   Access privileges
      -----------+----------+----------+------------+------------+-----------------------
       postgres  | postgres | UTF8     | en_US.utf8 | en_US.utf8 |
       template0 | postgres | UTF8     | en_US.utf8 | en_US.utf8 | =c/postgres          +
                 |          |          |            |            | postgres=CTc/postgres
       template1 | postgres | UTF8     | en_US.utf8 | en_US.utf8 | =c/postgres          +
                 |          |          |            |            | postgres=CTc/postgres
      (3 rows)

If you're restoring over an already existing DB and user, you may want to clean it first by dropping the current database and removing the user.

The user the installation uses is `mastodon` and the database it creates is `mastodon_production` (both can be defined in the environment file). Now that we're logged into Postgres, we could do:

    DROP DATABASE mastodon_production;
    DROP ROLE mastodon;

We can also do it with the binaries that live in the container (meaning, without logging into Postgres). So, once we `exit` from our Postgres session, we do (the `-U postgres` is needed because we are `root` inside the container, and there is no `root` role in Postgres):

    dropdb -U postgres mastodon_production && dropuser -U postgres mastodon

Let's continue now with the restoration; we're still logged into the DB container, right?

- Load the backup into Postgres:

  `gunzip < /backup.sql.gz | psql -U postgres`

  A long list of actions is printed on the screen. We just wait until everything is done. I did not get any warnings or errors here.

- Now we can remove the backup file from inside the container to save some space:

  `rm /backup.sql.gz`

- And finally, log out from the container:

  `exit`

- The last step is to bring down the DB container:

  `docker compose down db`
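As an alternative to copying the dump into the container, logging in and deleting it afterwards, the decompressed SQL can be streamed from the host straight into `psql` while the `db` service is up. A minimal sketch: `restore_db` is a hypothetical helper; `docker exec -i` keeps stdin open so the SQL reaches `psql`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: stream a gzipped pg_dumpall file from the host into
# psql inside a running Postgres container. $1: dump file, $2: container.
restore_db() {
  local dump="$1" container="$2"
  [ -f "$dump" ] || { echo "no such file: $dump" >&2; return 1; }
  # -i keeps stdin attached, so the decompressed SQL is fed to psql.
  gunzip < "$dump" | docker exec -i "$container" psql -U postgres
}

# Usage on jakku, after `docker compose up db -d`:
#   restore_db postgres_backup_20231211.sql.gz talamancasocial-db-1
#   docker compose down db
```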

6. Let's hit the button!

So we're all set; it's time to see if all these steps work together!

⚠️ Disclaimer

I am migrating from an already existing instance. That's why there is some work that I am explicitly skipping: the DNS and the SSL setup.

As we already have a domain pointing to our external IP, the DNS setup can be ignored because we only change the host behind the Reverse Proxy.

The same happens with SSL, as in my case the certificate is set up in the Reverse Proxy and the communication from it to the instance goes unencrypted over the internal network.

Therefore, if you're setting up a new instance or you don't have a Reverse Proxy that all your traffic hits, you will most likely need to set these up on your own.

6.1. Start the docker containers

Regardless of whether we just got the official code or built our own image, we now have a system ready to be spun up. So we do:

`docker compose up -d`

It will pull the images that are not in the system yet and spin up the containers. We can also follow the logs by running the following command:

`docker logs -f talamancasocial-web-1`

6.2. Change the Reverse Proxy entry to bring the traffic

So now we have the instance up and running, but the traffic is not hitting it. We need to log into the Reverse Proxy and forward the traffic here instead of to the old instance.
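Before touching the proxy, it can be worth confirming from `dagobah` that jakku already answers on its ports (this relies on the `docker-compose.yml` change above that allows traffic from all sources). A minimal sketch: `wait_for_url` is a hypothetical helper, and the `/health` and `/api/v1/streaming/health` paths are, as far as I know, the ones the stock Mastodon docker-compose health checks use.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a URL until it answers successfully or give up.
#   $1: URL  $2: attempts (default 30)  $3: seconds between attempts (default 5)
wait_for_url() {
  local url="$1" attempts="${2:-30}" delay="${3:-5}" i
  for ((i = 1; i <= attempts; i++)); do
    # -f: treat HTTP errors as failures, -s: no progress output.
    if curl -fs -o /dev/null "$url"; then
      echo "up after $i attempt(s): $url"
      return 0
    fi
    sleep "$delay"
  done
  echo "gave up on $url" >&2
  return 1
}

# Usage from dagobah, before editing the virtual host:
#   wait_for_url http://jakku:3000/health && wait_for_url http://jakku:4000/api/v1/streaming/health
```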

- Log into the Reverse Proxy machine:

  `ssh xavi@dagobah`

- Edit the virtual host that manages the traffic to the Mastodon instance, in my case:

  `sudo nano /etc/apache2/sites-available/012-talamanca.social.conf`

  The change here is to replace the previous hostname `jakku_old`, where the traffic was forwarded, with the new one, `jakku`. The configuration ends up like this:

      <VirtualHost 192.168.1.231:80>
          ServerName talamanca.social
          ProxyPreserveHost On
          ProxyPass / http://jakku:3000/ nocanon
          ProxyPassReverse / http://jakku:3000/
          ProxyRequests Off
          RewriteEngine on
          RewriteCond %{SERVER_NAME} =talamanca.social
          RewriteRule ^ https://%{SERVER_NAME}%{REQUEST_URI} [END,NE,R=permanent]
      </VirtualHost>

      <VirtualHost 192.168.1.231:443>
          ServerName talamanca.social
          Header always set Content-Security-Policy "default-src 'self'; script-src 'self'; style-src 'self'; font-src 'self';img-src 'self' cdn.devnamic.com data:; media-src 'self' cdn.devnamic.com; connect-src 'self' cdn.devnamic.com"
          RewriteEngine On
          RewriteCond %{HTTP:Connection} Upgrade [NC]
          RewriteCond %{HTTP:Upgrade} websocket [NC]
          RewriteRule /(.*) ws://jakku:4000/$1 [P,L]
          RewriteRule ^/system(.*) https://cdn.devnamic.com$1 [L,R=301,NE]
          ProxyPreserveHost On
          ProxyPass / http://jakku:3000/ nocanon
          ProxyPassReverse / http://jakku:3000/
          ProxyRequests Off
          ProxyPassReverseCookiePath / /
          RequestHeader set X_FORWARDED_PROTO 'https'
          SSLCertificateFile /etc/letsencrypt/live/talamanca.social/fullchain.pem
          SSLCertificateKeyFile /etc/letsencrypt/live/talamanca.social/privkey.pem
          Include /etc/letsencrypt/options-ssl-apache.conf
      </VirtualHost>

- Save (control + o) and exit (control + x).

- Now restart the Apache service:

  `sudo service apache2 restart`
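The hostname swap can also be scripted, with a config check before the restart so a typo doesn't take the proxy down. A minimal sketch: `repoint_proxy` is a hypothetical helper that only rewrites `//OLD:` occurrences (the `http://` and `ws://` backends), keeps a `.bak` copy, and treats the old hostname as a regex, which is fine for plain names like `jakku_old`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point an Apache vhost from one backend host to another.
#   $1: conf file  $2: old host  $3: new host
repoint_proxy() {
  local conf="$1" old="$2" new="$3"
  # Only touch "//old:" (http://old:3000, ws://old:4000); ServerName stays.
  sed -i.bak "s#//${old}:#//${new}:#g" "$conf" || return 1
  # Fail if any reference to the old backend survived.
  ! grep -q "//${old}:" "$conf"
}

# Usage on dagobah, as root (the vhost file is owned by root):
#   repoint_proxy /etc/apache2/sites-available/012-talamanca.social.conf jakku_old jakku \
#     && apachectl configtest && service apache2 restart
```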

And with this we can see the Docker logs on the jakku machine fill up with the requests coming to the instance.

Now it's the moment to open our browser and see that the instance is up and running! 🎉

6.3. Build the timelines for the users

We may notice that the users' timelines are empty. We need to rebuild them.

- Log into the web container:

  `docker exec -it talamancasocial-web-1 bash`

- Run the following command:

  `RAILS_ENV=production ./bin/tootctl feeds build`

  It will take a minute or way more, depending on the number of users and the size of the instance.

- Now we can leave the container:

  `exit`

7. Wrapping up

Well, I am actually surprised that everything went so smoothly. It was the first time I backed up and restored a DB from a Mastodon instance. The whole migration took around 4h, including a very slow pace of actions and a lot of idle time while I was taking notes, so all in all it could be reduced to 1h if you know what you're doing and don't document anything.

I am looking forward to seeing this little new Raspberry Pi 5 in production; so far it all feels smoother and faster.

The next step for this project is to set up a periodic backup, so I can finally take the constant fear of a catastrophe off my shoulders.

8. References

- Mastodon - Backing up your server

- Exporting and Importing Postgres Databases using gzip

- Migrating Mastodon from a Source Install to a Dockerized Install

- How to migrate a dockerized Mastodon to a non-dockerized Mastodon instance

- GitHub docs - Pushing commits to a remote repository

- StackOverflow - Change remote 'origin' to 'upstream' with Git

- How do I check out a remote Git branch?

- Upgrading a docker-compose based Mastodon server to gain today's security fixes

- mastodon-backup

- Backup To Remote Server over SSH