One day a message crossed my #Mastodon timeline: There is a simple #bookmarks #NodeJS application that federates via #ActivityPub as any other #fediverse account! Great, I have to try it. One month later I have my new LinkBlog up and running.

This post explains the installation of #Postmarks and also covers setting up a customization strategy that makes it possible to keep the project code up to date.

Overview

In this article I go through the steps to get a Postmarks application up and running on a Raspberry Pi 4 without Docker. For those following my articles, this is worth mentioning as a difference from my previous projects.

I split the process into the following sections:

- Requirements and assumptions
- Structure of the forked environment
- Set up Postmarks
- Set up a `systemd` service
- New entry into the Reverse Proxy
- Wrapping up

1. Requirements and assumptions

As usual in this kind of project, I assume the following:

- A Raspberry Pi up and running, following my previous article Spawning a Raspberry Pi 4 with Raspberry Pi OS. It is also up to date, following the recent article Upgrade Raspberry Pi OS from 11 Bullseye to 12 Bookworm.
- NodeJS is installed in the system, as I explained in Install NodeJS 20 into a Raspberry Pi 4. Postmarks uses version 16, but I installed version 20 and it works well.
- The network has a local DNS server, as explained in Quick DNS server on a Raspberry Pi, and that's why you won't see IP addresses here.
- The hostname is `alderaan` and the Linux user is `xavi`. You may want to replace them with your IP address and your own username.
- Traffic for ports 80 and 443 reaches the Raspberry Pi from the router through a Reverse Proxy, as I explained in Set up a Reverse Proxy in a Raspberry Pi with Apache. The hostname of the Reverse Proxy is `dagobah`.
- We should already have a domain or a subdomain set up and pointing to our external IP or using a Dynamic DNS system. This is all up to you. For this article I assume that `linkblog.arnaus.net` is set up to forward all traffic to my external IP.
- I assume that you have an account on GitHub, as we are going to fork the Postmarks project there.

2. Structure of the forked environment

As Postmarks appears to be quite a simple application, I wanted to try an approach that was recommended to me for customizing and publishing somebody else's project: don't clone and customize, fork and customize.

2.1. The concept behind fork & customize

As Postmarks is quite a young project, it's receiving a lot of attention from the owner and from the rest of the community. Only a month and a half has passed since I started playing with it, and in that time it has had a lot of pull requests merged and has gained in stability.

With the clone & customize approach that I use in previous projects like Mastodon or Pixelfed, I rely on releases and I do not pull changes often. This allows calm maintenance: I just check out the new version and run the migration scripts.

With a project that updates so often and doesn't have a release yet, I need a way to deal with fast incoming updates without breaking the environment too much. This is why I try a fork & customize approach here, with the help of the automation that GitHub offers.

⚠️ If you do not intend to customize the project's code or styles, a big chunk of this article will not be interesting to you. You can just git clone the code from the original repository and jump directly to the next section, where we set it up.

2.2. What this fork structure looks like

We'll rely on a feature that GitHub provides: Syncing a fork. I would actually recommend reading the whole Working with forks - GitHub Docs documentation.

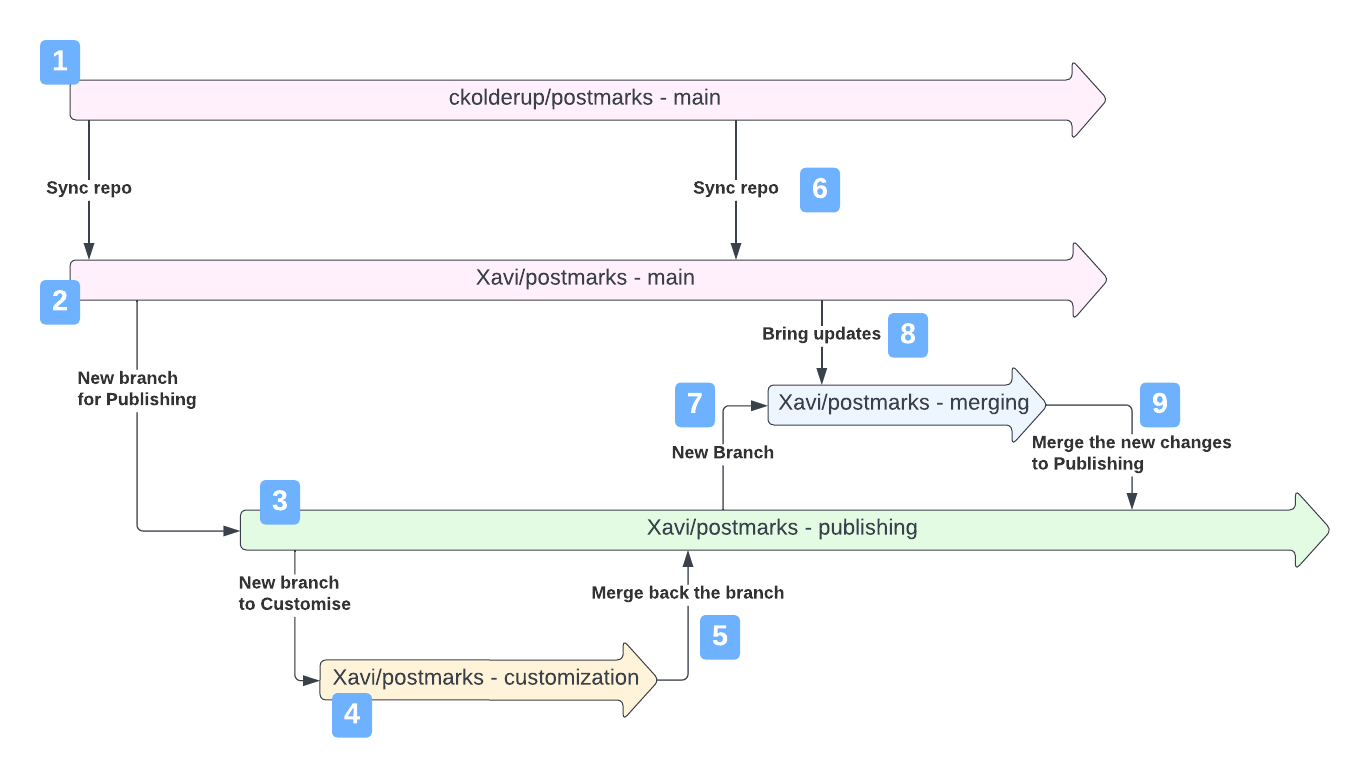

In a nutshell, what we want is to keep our main branch as a mirror of the original repo (to serve as the incoming route for the original code) and maintain a different branch for publishing (where the original code is merged with our customized code). Let's walk through the points in the diagram:

- This is the original repository on the `main` branch. At this point we still don't want to contribute to it, so we stay away and do not touch it.
- This is our forked project on the `main` branch, which should act as a local mirror of the original code. After forking, we'll need to sync it periodically to bring in the new code from the original repository.
- We create a new `publishing` branch from our forked repo. This will be the branch checked out on the host serving the application.
- We then create a new branch for every `customization` we want to apply. Most likely we want to do this on our local machine, so we can test the app while the server is still serving the `publishing` branch. So let's say that our customizations are done and look cool.
- We merge this `customization` branch into the `publishing` branch. At this point `publishing` contains all the original code plus the customizations. This is when you want to pull the changes on the server and do what is needed to get the code ready there, like `npm install` and restarting the app.
- The moment we identify that there are new changes in the original repository, we'll want to Sync the repos to bring this code into our forked repository.
- We want to ensure a smooth merge into `publishing`, so on our local computer we create a new `merging` branch from it, where we can play safely.
- Then we merge in the new code from our forked `main`. We may have to resolve conflicts and fix broken customizations. The good point is that while this work happens on our local computer, the server still serves the current state of the `publishing` branch, so nobody notices the merging work we do here; it's transparent to the user.
- Now that `merging` is ready and we have verified the app locally, we merge it into the `publishing` branch. Because of the work we did in the previous point, this merge is going to be smooth. This is when we `git pull` again on the server, where `publishing` is checked out, run `npm install` and restart the app so the new code takes effect in "production".
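The points above can be tried end to end in a throwaway sandbox before touching the real fork. The following is only a sketch under some assumptions: the directory names `upstream` and `fork`, the branch names `publish/demo`, `custom/styles` and `merging/demo`, and the file names are all made up for the demo, and a plain local clone stands in for the GitHub fork, so the Sync fork step is approximated by a `git pull`.

```shell
#!/bin/sh
set -eu

# Throwaway sandbox: nothing here touches a real repository.
sandbox=$(mktemp -d)
cd "$sandbox"

# Stand-in for the original repository, with one commit on main.
git init -q upstream
cd upstream
git symbolic-ref HEAD refs/heads/main
git config user.name "Demo"
git config user.email "demo@example.invalid"
echo "original" > app.txt
git add app.txt
git commit -q -m "Original code"
cd ..

# Stand-in for our fork (on GitHub this would be the Fork button plus git clone).
git clone -q upstream fork
cd fork
git config user.name "Demo"
git config user.email "demo@example.invalid"

# The publishing branch, created from the mirrored main.
git checkout -q -b publish/demo

# One branch per customization, merged back into publishing when ready.
git checkout -q -b custom/styles
echo "custom" > custom.txt
git add custom.txt
git commit -q -m "My customization"
git checkout -q publish/demo
git merge -q --no-edit custom/styles

# The original project moves on; we bring the new code into our main only.
(cd ../upstream && echo "update" > new.txt && git add new.txt && git commit -q -m "Upstream update")
git checkout -q main
git pull -q --ff-only

# Do the risky merge in a separate branch spawned from publishing...
git checkout -q -b merging/demo publish/demo
git merge -q --no-edit main

# ...and only then bring the verified result back into publishing.
git checkout -q publish/demo
git merge -q --no-edit merging/demo

# publishing now holds the original code, the update and the customization.
ls
```

In this sketch `main` never receives a commit of our own, which is what keeps the sync step a plain fast-forward.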

Now that we have seen the main idea, let's go deeper into each point:

2.3. Fork the project and get the code

That is pretty simple. Once we're logged into our GitHub account:

- Navigate to the Postmarks GitHub repository: https://github.com/ckolderup/postmarks
- Click the `Fork` button, placed in the top right area of the screen between the `Watch` and `Star` buttons. It will follow up with some more questions regarding the new forked repository in your account.

Let's say that it got forked into my account, as a repository called XaviArnaus/postmarks. Now we want to clone it in our local system:

- Open a terminal and move to where you want to have it cloned. In the case of this article, we want it cloned into the Raspberry Pi `alderaan`, so we ssh into it.

      ssh xavi@alderaan
      cd projects

- Clone the repository. Remember that we want the forked one!

      git clone git@github.com:XaviArnaus/postmarks.git

And here we have the forked repository in our local environment.

2.4. Create a branch for publishing

This is an idea that I got from my times of working with GitFlow. The point is to have one branch that contains the publishing state of the application to serve. Anything else should happen under the hood, and only when things are ready do we merge the changes into this publishing branch.

It does not really matter what we do under the hood, whether we do super-secure-and-complicated stuff to keep merging issues from hitting "production" or we simply merge everything into this branch as it comes from the original code. The fact is that the tool that GitHub provides merges main to main (by default, it can be changed), so we had better not have our main branch polluted with changes that may block the sync from the original repository (unless you like to solve conflicts ninja style).

Therefore, I create a new publish branch where I accumulate my customizations and the updates from the original code, well merged and ready to be published:

    git checkout -b publish/linkblog-arnaus-net

It helps a lot to mentally switch to this environment labelling when it comes to branches:

- `main`: It is no longer the main branch. It is the "incoming changes from the original source". I will make no changes here nor merge anything that is not original code.
- `publish/linkblog-arnaus-net`: It is the new main branch. Of course, you can use another name. It will accumulate my customizations and the original code. All branches that change original code should start from here and end up merged here too.

ℹ️ At this point you're actually ready to set up the project and start serving it, also to get to know it and discover what you want to customize. You can simply pause this section and jump to the next one to set it up. Once you have your instance up and running, you can come back here and customize it.

2.5. Apply customizations

I am not going to go very deep into the customizations themselves. By definition, they are custom to everybody's taste. In my case, my motivation was to make the style seamless with the rest of my blog, so there is some work on the main layout template, the about page template and the CSS styles.

My recommendation is to apply software engineering habits, like spawning a new branch from publish (remember, this is our main now) for every feature (styling is a feature) and merging it back into our publish branch.

2.6. And when we get new updates from the original code?

The base idea of all this strategy is that the local repository is the one merging the code from the original repository to our publish branch. This way we control how the merge is done and we can even commit some extra updates before pushing the whole changeset into our forked repository.

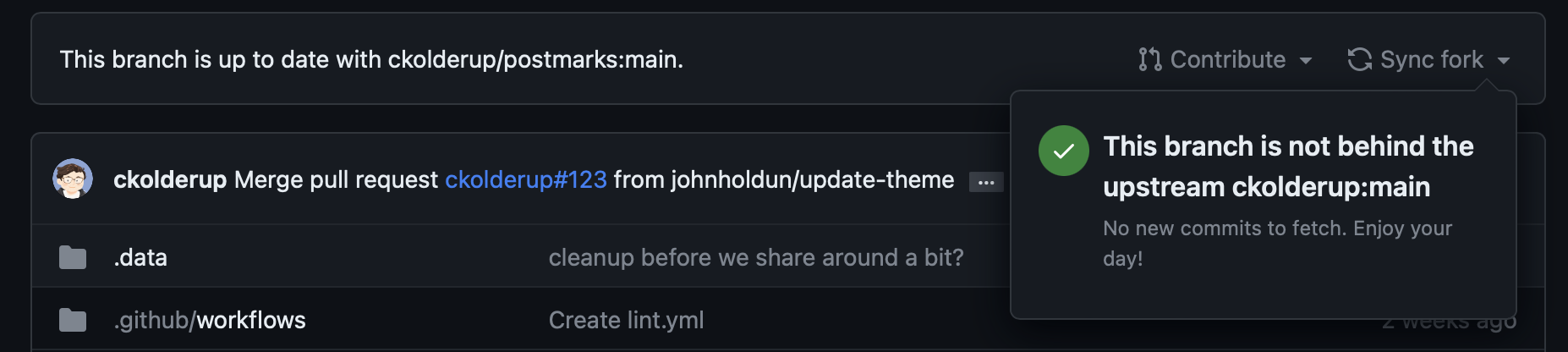

When you visit the GitHub page for your forked repository in your account you'll notice a bar over the files list with a sentence "This branch is up to date with ckolderup/postmarks:main". When this changes, it will be time for syncing the fork.

In this same bar in the right edge there is a Sync fork button that allows us to pull and merge the updates from the original repository's main branch to our forked repository's main branch. And that's why it is important that our main branch is free of changes. When the sync is done we'll have the latest code in our main branch in GitHub.
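If you prefer the terminal to the Sync fork button, the same result can be reached from the local clone by fetching from the original repository directly. This is a minimal sketch, not the author's workflow: the function name `sync_main` is hypothetical and the remote name `upstream` is only the usual convention. It assumes the clone's `origin` is the fork and that `main` carries no commits of our own.

```shell
#!/bin/sh
# sync_main UPSTREAM_URL
# Fast-forward the local main to the original repository's main,
# then publish the result to the fork (origin).
sync_main() {
    upstream_url=$1

    # Register the original repository once, under the name "upstream".
    git remote get-url upstream >/dev/null 2>&1 ||
        git remote add upstream "$upstream_url" || return 1

    git fetch -q upstream &&
    git checkout -q main &&
    # --ff-only refuses to continue if main has diverged, which would mean
    # that main is no longer a clean mirror of the original code.
    git merge -q --ff-only upstream/main &&
    git push -q origin main
}
```

From inside the clone it could be called as `sync_main https://github.com/ckolderup/postmarks.git`. After it succeeds the local `main` is already up to date, so the `git pull` in the next steps has nothing left to fetch.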

Once the forked repository has the latest code, it's time to update our local main branch:

- Check out the `main` branch

      git checkout main

- Get the updates from our forked repository

      git pull

Now we have our local main branch up to date. We must merge the new changes in main into our publish branch. My recommendation is not to do it directly in the publish branch, but to create a new branch from publish, merge the code from main, solve the conflicts, ensure that everything works, and then merge back into publish. I also recommend that this happens on your local computer, not on the host that serves the application, because the app that is in memory serving the instance relies on a set of files that you'll be changing, and this affects the experience of the user. It is better to do this work locally and then pull the results on the server.

- Check out the `publish` branch, so that it becomes the starting branch

      git checkout publish/linkblog-arnaus-net

- Create a new branch from it and check it out automatically. This is where we'll merge the original code, which is why I choose a name accordingly.

      git checkout -b merging/updates-20230930

- Now merge the changes from `main` here

      git merge main

  It's very possible that we have some conflicts. Let's simply count on it. At this point we should ask for the status of the environment

      git status

  ... and it will tell us which files are in conflict. For every single one we should open the editor, solve the conflicts, save the file and `git add` it; once all of them are resolved, `git commit`. Common conflict resolving work. Nasty but needed.

  Let's say we fixed the conflicts. We should now go and see if the app looks good. Most likely we need to:

  - Install new dependencies that may come with the new code

          npm install

  - Restart the service to load the changes

          sudo service postmarks restart

  ... and hopefully it works straight away. If not, just check the logs and solve the issues.

  It can also be that the new code broke some customizations we have. It's time to iterate over them and re-apply them or polish them with the new code, making new commits in this branch towards the final successful state. The goal is to have a working application that contains both the new code and the customizations applied.

  Let's say we now have the new code working, merged together with the customizations. Remember, we're still in a `merging` branch that was spawned from our `publish`. Having the code working here guarantees that the merge back into the `publish` branch won't be an issue. So:

- Check out the `publish` branch

      git checkout publish/linkblog-arnaus-net

- Merge the work done in the `merging` updates branch

      git merge merging/updates-20230930

  Now we have the `publish` branch updated and fully working with our customizations.

- Push the changes to our forked repository

      git push

  Why? Because all this work was to merge our custom state with the new changes, and we want to keep this state. If our host explodes, having the code outside the machine will allow us to quickly spawn another host and have it up and running in minimum time (yeah, counting on a backup of the data, because you do backups, right?)

- Restart the service. Yes, it is up and running, but what we have loaded in memory was generated from the code in the `merging` updates branch, not `publish`. Technically it is the same, but it's better to be sure.

      sudo service postmarks restart

At this point we're up to date and we keep our customizations. Great! If we did this on our local computer, it's time to get into the server (assuming that it already has the publish/linkblog-arnaus-net branch checked out) and:

- Pull the changes that we merged recently

      git pull

- Install new dependencies that may come with the new code

      npm install

- Restart the service to load the changes

      sudo service postmarks restart
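Since these three server-side steps repeat on every update, they can be wrapped in a small helper. This is a hypothetical sketch, not part of Postmarks: the names `run`, `deploy`, `APP_DIR`, `BRANCH`, `SERVICE` and `DRY_RUN` are my own, and the defaults simply mirror the paths and names used in this article.

```shell
#!/bin/sh
# Hypothetical deploy helper for the server. The defaults mirror the values
# used in this article; override them through the environment if yours differ.
APP_DIR="${APP_DIR:-$HOME/projects/postmarks}"
BRANCH="${BRANCH:-publish/linkblog-arnaus-net}"
SERVICE="${SERVICE:-postmarks}"

# Run a command, or only print it when DRY_RUN=1 (useful for a rehearsal).
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Each step only runs if the previous one succeeded, so a failed pull or a
# failed npm install never leads to a restart on top of half-updated code.
deploy() {
    run cd "$APP_DIR" &&
    run git checkout "$BRANCH" &&
    run git pull --ff-only &&
    run npm install &&
    run sudo service "$SERVICE" restart
}
```

After sourcing the file, setting `DRY_RUN=1` and calling `deploy` only lists the commands. Without it the steps are executed for real; calling it as `(deploy)` keeps the `cd` from changing the directory of your interactive shell.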

Yay! we have it!

Why did we do so much work with branches? I could merge directly to the publish without creating a merging updates branch!

Yes, but then you can't step back and return to a safe previous state. Keeping the publish branch untouched and doing the work in a different branch allows us to mess things up there as much as we want, as publish will always have our valuable customizations and main will always have the original code.
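To make the "step back" concrete, here is a small sandbox sketch of a merge that conflicts and is then abandoned without ever touching the publish branch. Everything in it is made up for the demo (the repository `demo`, the branches `publish/demo` and `merging/demo`, the file `style.css`); it only assumes that `git` is installed.

```shell
#!/bin/sh
set -eu

# Throwaway sandbox: nothing here touches a real repository.
sandbox=$(mktemp -d)
cd "$sandbox"
git init -q demo
cd demo
git symbolic-ref HEAD refs/heads/main
git config user.name "Demo"
git config user.email "demo@example.invalid"

# The "original code" on main.
echo "color: blue" > style.css
git add style.css
git commit -q -m "Original style"

# Our customization lives on the publish branch.
git checkout -q -b publish/demo
echo "color: green" > style.css
git commit -q -am "My customization"

# Meanwhile the original project changes the very same line.
git checkout -q main
echo "color: red" > style.css
git commit -q -am "Upstream change"

# Try the merge in a separate branch spawned from publish.
git checkout -q -b merging/demo publish/demo
conflicted=""
if git merge --no-edit main >/dev/null 2>&1; then
    echo "merged cleanly"
else
    # List the files that are left in a conflicted (unmerged) state.
    conflicted=$(git diff --name-only --diff-filter=U)
    echo "conflicts in: $conflicted"
    # Give up on this attempt; the branch returns to its pre-merge state.
    git merge --abort
fi

# publish was never involved, so going back is just a checkout.
git checkout -q publish/demo
git branch -q -D merging/demo
cat style.css
```

In a real update you would resolve the conflict instead of aborting; the point is only that aborting, or deleting the merging branch altogether, costs nothing.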

3. Set up Postmarks

So we know that:

- We have the subdomain `linkblog.arnaus.net` that will be used to serve Postmarks.
- The Reverse Proxy will handle the SSL connection and certificates.
- We will NOT use Glitch for anything here.

So, assuming you're already ssh'ed into the Raspberry Pi and have moved into the repository directory, we'll follow what the README of the project says:

- Create a `.env` file in the root of the project

      nano .env

- Add a `PUBLIC_BASE_URL` var that defines the host we'll use for publishing

      PUBLIC_BASE_URL=linkblog.arnaus.net

- Add an `ADMIN_KEY` var that defines the password to enter the Admin area

      ADMIN_KEY=my-super-secure-password

- Add a `SESSION_SECRET` var that defines the salt string used to generate the session cookie

      SESSION_SECRET=thisismysessionsecretstring123wowsosecure

- [Optional] In case you'd like all accesses to the application to be logged, which is useful to debug your connection to the Reverse Proxy, add a `LOGGING_ENABLED` var

      LOGGING_ENABLED=true

- Save (`ctrl+o`) and exit (`ctrl+x`)
- Create a new `account.json` out of the distributed `account.json.example`

      cp account.json.example account.json

- Edit this `account.json`

      nano account.json

- Update the values there:

  - `username` is the account part that will be used as the actor in the fediverse. For example in this article, to have `xavi@linkblog.arnaus.net` we need to set this value to `xavi`
  - `avatar` is a URL to an image already public on the internet that will be used as this user's avatar in the fediverse and will also be displayed in the `About` section. You most likely want to upload a PNG wherever you have access to and point this value there.
  - `displayName` is the name of the application, and will be displayed as the site name
  - `description` is a text that will appear in the `About` section and also as the bio of the fediverse user.

  In my case I ended up with a file content similar to the following:

      {
        "username": "xavi",
        "avatar": "https://xavier.arnaus.net/favicon.png",
        "displayName": "LinkBlog",
        "description": "This is my LinkBlog"
      }

- Save (`ctrl+o`) and exit (`ctrl+x`)
- Now it's time to let `npm` install all dependencies

      npm install

With this, the application should be ready to be started.
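The `ADMIN_KEY` and `SESSION_SECRET` values shown in the `.env` file above are placeholders, and hand-typed strings tend to be guessable. One possible way to get random values using only standard tools is sketched below; the helper name `random_hex` is my own, and the lengths (16 and 32 bytes) are an arbitrary choice, not something Postmarks requires.

```shell
#!/bin/sh
# random_hex N: print N random bytes as 2*N lowercase hex characters.
random_hex() {
    head -c "$1" /dev/urandom | od -An -tx1 | tr -d ' \n'
}

ADMIN_KEY=$(random_hex 16)
SESSION_SECRET=$(random_hex 32)

# Print the two lines so they can be pasted into .env.
printf 'ADMIN_KEY=%s\nSESSION_SECRET=%s\n' "$ADMIN_KEY" "$SESSION_SECRET"
```

Keep in mind that `ADMIN_KEY` is what you type to enter the Admin area, so store the generated value in a password manager before closing the terminal.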

4. Set up a systemd service

As this is a NodeJS application, we just need to run npm run start: the app will start and become ready to serve if no errors are found.

This is OK for development and testing purposes, but the terminal session then needs to be kept alive constantly, otherwise the application is terminated with the session.

We have several options, like running the app in a screen session, running it in the background or making a systemd service that manages it. Let's go with this last option, as it integrates pretty well with the rest of the services we have on the Raspberry Pi.

- Create a new file that will handle the configuration for the postmarks service:

      sudo nano /etc/systemd/system/postmarks.service

- Add the following content:

      [Unit]
      Description=Postmarks

      [Service]
      ExecStart=/usr/bin/node /home/xavi/projects/postmarks/server.js
      Restart=always
      # sqlite fails. It needs as user and group the common RaspberryPi user
      #User=nobody
      #Group=nogroup
      User=xavi
      Group=xavi
      Environment=PATH=/usr/bin:/usr/local/bin
      # Don't make it "production", so allows the Reverse Proxy to forward through no-SSL
      #Environment=NODE_ENV=production
      #Environment=ENVIRONMENT=production
      WorkingDirectory=/home/xavi/projects/postmarks

      [Install]
      WantedBy=multi-user.target

  Some considerations here:

  - `ExecStart` needs to have the full path to the node binary AND to the server Javascript file
  - `User` and `Group` need to be set to the common user of the Raspberry Pi, `xavi` in my case. You don't want to make it `root`, and with `nobody`/`nogroup` sqlite fails to start. (If anybody can improve this, I'm open to suggestions)
  - If you don't set up a Reverse Proxy (that comes in the next section) and this Raspberry Pi receives the traffic directly, you will most likely handle the SSL certificates here, and therefore this app will handle HTTPS directly. In that case, set the `NODE_ENV` or the `ENVIRONMENT` var to "production". Otherwise (as in my case), I want the communication between the Reverse Proxy and this host to happen unencrypted, and therefore I don't activate the "production" environment. The "production" environment just produces fewer logs and ensures SSL-only traffic.
  - `WorkingDirectory` should point to the directory where we have the application code.

- Save (`ctrl+o`) and exit (`ctrl+x`)

At this point you should be able to start the application by typing

    sudo service postmarks start

... and also stop the service with

    sudo service postmarks stop

You can also check which state the service is in with

    sudo service postmarks status

And finally, no less important, the logs are in /var/log/syslog and can be followed (use tail -f) or seen (use cat) with

    tail -f /var/log/syslog | grep postmarks

5. New entry into the Reverse Proxy

Now we need to forward traffic to the Raspberry Pi that hosts the Postmarks application. Remember that this is because, in my case, I have all projects behind a Reverse Proxy: all domains and subdomains deliver the traffic to my external IP, the router forwards all traffic coming in on ports 80 and 443 to the Reverse Proxy, and the proxy is the one that distributes the traffic to each app.

One more time, if you don't have a Reverse Proxy and receive the traffic from your router directly on the Raspberry Pi that hosts the application, most likely you only need to set up SSL and that would be it. Just keep in mind that this NodeJS application runs on port 3000, so you want to set up a NAT rule in your router saying that the incoming traffic on ports 80 and 443 should go to port 3000 on this Raspberry Pi.

5.1. Add a new site in our Reverse Proxy

As we have the Reverse Proxy in another host, let's

- First of all ssh into `dagobah`

      ssh xavi@dagobah

- Move into the Apache virtual hosts directory

      cd /etc/apache2/sites-available

- Create and edit a new virtual host file

      sudo nano postmarks-linkblog.arnaus.net.conf

- Add the following content

      <VirtualHost *:80>
          ServerName linkblog.arnaus.net
          ProxyPreserveHost On
          ProxyPass / http://alderaan:3000/ nocanon
          ProxyPassReverse / http://alderaan:3000/
          ProxyRequests Off
      </VirtualHost>

      <VirtualHost *:443>
          ServerName linkblog.arnaus.net
          ProxyPreserveHost On
          ProxyPass / http://alderaan:3000/ nocanon
          ProxyPassReverse / http://alderaan:3000/
          ProxyRequests Off
      </VirtualHost>

  What we're doing here is setting up 2 virtual hosts, one for port 80 and another for port 443, both forwarding to `alderaan` without SSL on port `3000`, with the standard configuration for the proxy action.

  They are both the same because in the next step I'm going to generate a certificate with `certbot` and its Apache plugin, and if a virtual host for port 443 already exists it does not create a different file for it, which I prefer.

  So in case you don't want to use SSL you can leave out the virtual host for port 443, but I strongly advise against going without SSL.

- Save (`ctrl+o`) and exit (`ctrl+x`)
- Activate these virtual hosts

      sudo a2ensite postmarks-linkblog.arnaus.net.conf

- Restart the Apache2 service for the changes to take effect

      sudo service apache2 restart

At this point we should be able to open the browser and navigate to http://linkblog.arnaus.net (note the use of HTTP, no SSL) and the application should answer.

5.2. Add the SSL certificates

We'll make use of certbot from Let's Encrypt. Staying on the same dagobah machine, I already have it installed from previous projects, but if you need to install it just check out the Set up a Reverse Proxy in a Raspberry Pi with Apache article, where I explain it in detail in the section Set up the SSL handling in the reverse proxy host. It is worth mentioning that you should install the Apache module too, so that the certbot command can edit the Apache virtual host files itself.

So, to generate a new certificate specifically for this subdomain:

    sudo certbot --apache -d linkblog.arnaus.net

The command will stop Apache2, do a validation challenge, update the files if everything was correct and reactivate Apache2.

Once it finishes, we can check the current state of our virtual host file

- Show the content of the file

      cat postmarks-linkblog.arnaus.net.conf

  And it should show something like the following (I added some indentation here to make it prettier)

      <VirtualHost *:80>
          ServerName linkblog.arnaus.net
          ProxyPreserveHost On
          ProxyPass / http://alderaan:3000/ nocanon
          ProxyPassReverse / http://alderaan:3000/
          ProxyRequests Off
          RewriteEngine on
          RewriteCond %{SERVER_NAME} =linkblog.arnaus.net
          RewriteRule ^ https://%{SERVER_NAME}%{REQUEST_URI} [END,NE,R=permanent]
      </VirtualHost>

      <VirtualHost *:443>
          ServerName linkblog.arnaus.net
          ProxyPreserveHost On
          ProxyPass / http://alderaan:3000/ nocanon
          ProxyPassReverse / http://alderaan:3000/
          ProxyRequests Off
          SSLCertificateFile /etc/letsencrypt/live/linkblog.arnaus.net/fullchain.pem
          SSLCertificateKeyFile /etc/letsencrypt/live/linkblog.arnaus.net/privkey.pem
          Include /etc/letsencrypt/options-ssl-apache.conf
      </VirtualHost>

  As you see, `certbot` did 3 main things:

  - It generated the certificates and stored them in our system
  - It added a redirection rule to the port 80 virtual host to redirect to the port 443 virtual host
  - It added the definition of the certificates to the port 443 virtual host

- I usually also add one directive to the port 443 virtual host to let cookies be proxied as well, by adding the following line at the end of the definition, just before closing the section:

      ProxyPassReverseCookiePath / /

6. Wrapping up

So we have a custom instance of Postmarks up and running, behind a Reverse Proxy, federating around, with a nice setup that allows us to customize it and keep the code up to date.

As I mentioned in the article, the whole process feels long because of the customizations we want to apply and keep. If you're not interested in such customization, just cloning the original repository lets you skip almost the whole of section 2.

As usual, don't hesitate to contact me for any clarification! Happy coding!